Instruction Set Architecture

Triton VM is a stack machine with RAM. It is a Harvard architecture with read-only memory for the program. The arithmetization of the VM is defined over the B-field where is the Oxfoi prime, i.e., .1 This means the registers and memory elements take values from , and the transition function gives rise to low-degree transition verification polynomials from the ring of multivariate polynomials over .

At certain points in the course of arithmetization we need an extension field over to ensure sufficient soundness. To this end we use , i.e., the quotient ring of remainders of polynomials after division by the Shah polynomial, . We refer to this field as the X-field for short, and its elements as X-field elements.

Instructions have variable width:

they either consist of one word, i.e., one B-field element, or of two words, i.e., two B-field elements.

An example for a single-word instruction is add, adding the two elements on top of the stack, leaving the result as the new top of the stack.

An example for a double-word instruction is push + arg, pushing arg to the stack.

Triton VM has two interfaces for data input, one for public and one for secret data, and one interface for data output, whose data is always public. The public interfaces differ from the private one, especially regarding their arithmetization.

-

The name “Oxfoi” comes from the prime’s hexadecimal representation

0xffffffff00000001. ↩

Data Structures

Memory

The term memory refers to a data structure that gives read access (and possibly write access, too) to elements indicated by an address. Regardless of the data structure, the address lives in the B-field. There are four separate notions of memory:

- Program Memory, from which the VM reads instructions.

- Op Stack Memory, which stores the operational stack.

- RAM, to which the VM can read and write field elements.

- Jump Stack Memory, which stores the entire jump stack.

Program Memory

Program memory holds the instructions of the program currently executed by Triton VM. It is immutable.

Operational Stack

The stack is a last-in;first-out data structure that allows the program to store intermediate variables, pass arguments, and keep pointers to objects held in RAM. In this document, the operational stack is either referred to as just “stack” or, if more clarity is desired, “op stack.”

From the Virtual Machine’s point of view, the stack is a single, continuous object. The first 16 elements of the stack can be accessed very conveniently. Elements deeper in the stack require removing some of the higher elements, possibly after storing them in RAM.

For reasons of arithmetization, the stack is actually split into two distinct parts:

- the operational stack registers

st0throughst15, and - the Op Stack Underflow Memory.

The motivation and the interplay between the two parts is described and exemplified in arithmetization of the Op Stack table.

Random Access Memory

Triton VM has dedicated Random Access Memory.

It can hold up to many base field elements, where is the Oxfoi prime1.

Programs can read from and write to RAM using instructions read_mem

and write_mem.

The initial RAM is determined by the entity running Triton VM. Populating RAM this way can be beneficial for a program’s execution and proving time, especially if substantial amounts of data from the input streams needs to be persisted in RAM. This initialization is one form of secret input, and one of two mechanisms that make Triton VM a non-deterministic virtual machine. The other mechanism is dedicated instructions.

Jump Stack

Another last-in;first-out data structure, similar to the op stack.

The jump stack keeps track of return and destination addresses.

It changes only when control follows a call or

return instruction, and might change through the

recurse_or_return instruction.

Furthermore, executing instructions return,

recurse, and recurse_or_return

require a non-empty jump stack.

-

Of course, the machine running Triton VM might have stricter limitations: storing or accessing bits EiB of data is a non-trivial engineering feat. ↩

Registers

This section covers all registers. Only a subset of these registers relate to the instruction set; the remaining registers exist only to enable an efficient arithmetization and are marked with an asterisk (*). Each register directly corresponds to one column in the Processor Table.

| Register | Name | Purpose |

|---|---|---|

*clk | cycle counter | counts the number of cycles the program has been running for |

*IsPadding | padding indicator | indicates whether current state is only recorded to improve on STARK’s computational runtime |

ip | instruction pointer | contains the memory address (in Program Memory) of the instruction |

ci | current instruction | contains the current instruction |

nia | next instruction (or argument) | contains either the instruction at the next address in Program Memory, or the argument for the current instruction |

*ib0 through ib6 | instruction bit | decomposition of the instruction’s opcode used to keep the AIR degree low |

jsp | jump stack pointer | contains the memory address (in jump stack memory) of the top of the jump stack |

jso | jump stack origin | contains the value of the instruction pointer of the last call |

jsd | jump stack destination | contains the argument of the last call |

st0 through st15 | operational stack registers | contain explicit operational stack values |

*op_stack_pointer | operational stack pointer | the current size of the operational stack |

*hv0 through hv5 | helper variable registers | helper variables for some arithmetic operations |

*cjd_mul | clock jump difference lookup multiplicity | multiplicity with which the current clk is looked up by the Op Stack Table, RAM Table, and Jump Stack Table |

Instruction

Register ip, the instruction pointer, contains the address of the current instruction in

Program Memory.

The opcode of the current instruction is contained in

the register current instruction, or ci.

Register next instruction (or argument), or nia, either contains the next instruction or,

if it has one, the argument of the current instruction.

For reasons of arithmetization, ci is decomposed, giving rise to the instruction bit registers,

labeled ib0 through ib6.

Stack

The stack is represented by 16 registers called stack registers (st0 – st15) plus the op stack underflow memory.

The top 16 elements of the op stack are directly accessible, the remainder of the op stack, i.e, the part held in op stack underflow memory, is not.

In order to access elements of the op stack held in op stack underflow memory, the stack has to shrink by discarding elements from the top – potentially after writing them to RAM – thus moving lower elements into the stack registers.

For reasons of arithmetization, the stack always contains a minimum of 16 elements. Trying to run an instruction which would result in a stack of smaller total length than 16 crashes the VM.

Stack elements st0 through st10 are initially 0.

Stack elements st11 through st15, i.e., the very bottom of the stack, are initialized with the hash digest of the program that is being executed.

See the mechanics of program attestation for further explanations on stack initialization.

The register op_stack_pointer is not directly accessible by the program running in TritonVM.

It exists only to allow efficient arithmetization.

Helper Variables

Some instructions require helper variables in order to generate an efficient arithmetization.

To this end, there are 6 helper variable registers, labeled hv0 through hv5.

These registers are part of the arithmetization of the architecture, but not needed to define the instruction set.

Because they are only needed for some instructions, the helper variables are not generally defined.

For instruction group decompose_arg and instructions

skiz,

split,

eq,

merkle_step,

merkle_step_mem,

xx_dot_step, and

xb_dot_step,

the behavior is defined in the respective sections.

About Instructions

This section describes some general properties and behaviors of Triton VM’s instructions. The next section lists and describes all the instructions that Triton VM can execute.

Instruction Sizes

Most instructions are contained within a single, parameterless machine word.

They are considered single-word instructions.

An example of a single-word instruction is halt.

Some instructions take one machine word as argument and are so considered double-word instructions.

They are recognized by the form “instr + arg”.

An example of a double-word instruction is push + a, which takes immediate argument a.

Automatic Instruction Pointer Increment

Unless a different behavior is indicated, instructions increment the

instruction pointer ip by their size.

For example, instruction halt increments the ip by 1.

Instruction push increments the ip by 2.

Many control flow instructions manipulate the instruction pointer in

a manner deviating from this general scheme.

Non-Deterministic Instructions

Instructions divine and merkle_step

make Triton a virtual machine that can execute non-deterministic programs.

As programs go, this concept is somewhat unusual and benefits from additional explanation.

From the perspective of the program, the instruction divine makes some n elements magically

appear on the stack. It is not at all specified what those elements are, but generally speaking,

they have to be exactly correct, else execution fails. Hence, from the perspective of the program,

it just non-deterministically guesses the correct values in a moment of divine clarity.

Looking at the entire system, consisting of the VM, the program, and all inputs – both public and secret – execution is deterministic: the divined values were supplied as secret input and are read from there.

Hashing and Sponge Instructions

The instructions sponge_init,

sponge_absorb,

sponge_absorb_mem, and

sponge_squeeze are the interface

for using the Tip5 permutation in a

Sponge construction.

The capacity is never accessible to the program that’s being executed by Triton VM.

At any given time, at most one Sponge state exists.

In particular, Triton VM does not start with an initialized Sponge state.

If a program uses the Sponge state, the first Sponge instruction must be sponge_init;

otherwise, Triton VM will crash.

Only instruction sponge_init resets the state of the Sponge, and only the three Sponge

instructions influence the Sponge’s state.

Notably, executing instruction hash does not modify the Sponge’s state.

When using the Sponge instructions, it is the programmer’s responsibility to take care of proper input padding: Triton VM cannot know the number of elements that will be absorbed. For more information, see section 2.5 of the Tip5 paper.

Regarding Opcodes

An instruction’s operation code, or opcode, is the machine word uniquely identifying the instruction. For reasons of efficient arithmetization, certain properties of the instruction are encoded in the opcode. Concretely, interpreting the field element in standard representation:

- for all double-word instructions, the least significant bit is 1.

- for all instructions shrinking the operational stack, the second-to-least significant bit is 1.

- for all u32 instructions, the third-to-least significant bit is 1.

The first property is used by instruction skiz (see also its arithmetization). The second property helps with proving consistency of the Op Stack. The third property allows efficient arithmetization of the running product for the Permutation Argument between Processor Table and U32 Table.

Instructions

Triton VM’s instructions are (loosely and informally) grouped into the following categories:

- Stack Manipulation

- Control Flow

- Memory Access

- Hashing

- Base Field Arithmetic

- Bitwise Arithmetic

- Extension Field Arithmetic

- Input/Output

- Many-In-One

The following table is a summary of all instructions. For more details, read on below.

| Instruction | Description |

|---|---|

push + a | Push a onto the stack. |

pop + n | Pop the n top elements from the stack. |

divine + n | Push n non-deterministic elements to the stack. |

pick + i | Move stack element i to the top of the stack. |

place + i | Move the top of the stack to the position i. |

dup + i | Duplicate stack element i onto the stack. |

swap + i | Swap stack element i with the top of the stack. |

halt | Indicate graceful shutdown of the VM. |

nop | Do nothing. |

skiz | Conditionally skip the next instruction. |

call + d | Continue execution at address d. |

return | Return to the last call-site. |

recurse | Continue execution at the location last called. |

recurse_or_return | Either recurse or return. |

assert | Assert that the top of the stack is 1. |

read_mem + n | Read n elements from RAM. |

write_mem + n | Write n elements to RAM. |

hash | Hash the top of the stack. |

assert_vector | Assert equivalence of the two top quintuples. |

sponge_init | Initialize the Sponge state. |

sponge_absorb | Absorb the top of the stack into the Sponge state. |

sponge_absorb_mem | Absorb from RAM into the Sponge state. |

sponge_squeeze | Squeeze the Sponge state onto the stack. |

add | Add two base field elements. |

addi + a | Add a to the top of the stack. |

mul | Multiply two base field elements. |

invert | Base-field reciprocal of the top of the stack. |

eq | Compare the top two stack elements for equality. |

split | Split the top of the stack into 32-bit words. |

lt | Compare two elements for “less than”. |

and | Bitwise “and”. |

xor | Bitwise “xor”. |

log_2_floor | The log₂ of the top of the stack, rounded down. |

pow | The top of the stack to the power of its runner-up. |

div_mod | Division with remainder. |

pop_count | The hamming weight of the top of the stack. |

xx_add | Add two extension field elements. |

xx_mul | Multiply two extension field elements. |

x_invert | Extension-field reciprocal of the top of the stack. |

xb_mul | Multiply elements from the extension and base field. |

read_io + n | Read n elements from standard input. |

write_io + n | Write n elements to standard output. |

merkle_step | Helps traversing a Merkle tree using secret input. |

merkle_step_mem | Helps traversing a Merkle tree using RAM. |

xx_dot_step | Helps computing an extension field dot product. |

xb_dot_step | Helps computing a mixed-field dot product. |

Stack Manipulation

push + a

Opcode: 1

Pushes a onto the stack.

| old stack | new stack |

|---|---|

_ | _ a |

pop + n

Opcode: 3

Pops the n top elements from the stack.

1 ⩽ n ⩽ 5.

n | old stack | new stack |

|---|---|---|

| 1 | _ a | _ |

| 2 | _ b a | _ |

| 3 | _ c b a | _ |

| 4 | _ d c b a | _ |

| 5 | _ e d c b a | _ |

divine + n

Opcode: 9

Pushes n non-deterministic elements a to the stack.

1 ⩽ n ⩽ 5.

This is part of the interface for Triton VM’s secret input; see also the section regarding non-determinism.

The name of the instruction is the verb (not the adjective) meaning “to discover by intuition or insight.”

n | old stack | new stack |

|---|---|---|

| 1 | _ | _ a |

| 2 | _ | _ b a |

| 3 | _ | _ c b a |

| 4 | _ | _ d c b a |

| 5 | _ | _ e d c b a |

pick + i

Opcode: 17

Moves the element indicated by i to the top of the stack.

0 ⩽ i < 16.

i | old stack | new stack |

|---|---|---|

| 0 | _ d c b a x | _ d c b a x |

| 1 | _ d c b x a | _ d c b a x |

| 2 | _ d c x b a | _ d c b a x |

| 3 | _ d x c b a | _ d c b a x |

| 4 | _ x d c b a | _ d c b a x |

| … | … | … |

place + i

Opcode: 25

Moves the top of the stack to the indicated position i.

0 ⩽ i < 16.

i | old stack | new stack |

|---|---|---|

| 0 | _ d c b a x | _ d c b a x |

| 1 | _ d c b a x | _ d c b x a |

| 2 | _ d c b a x | _ d c x b a |

| 3 | _ d c b a x | _ d x c b a |

| 4 | _ d c b a x | _ x d c b a |

| … | … | … |

dup + i

Opcode: 33

Duplicates the element ith stack element and pushes it onto the stack.

0 ⩽ i < 16.

i | old stack | new stack |

|---|---|---|

| 0 | _ e d c b a | _ e d c b a a |

| 1 | _ e d c b a | _ e d c b a b |

| 2 | _ e d c b a | _ e d c b a c |

| 3 | _ e d c b a | _ e d c b a d |

| 4 | _ e d c b a | _ e d c b a e |

| … | … | … |

swap + i

Opcode: 41

Swaps the ith stack element with the top of the stack.

0 ⩽ i < 16.

i | old stack | new stack |

|---|---|---|

| 0 | _ e d c b a | _ e a c b a |

| 1 | _ e d c b a | _ e d c a b |

| 2 | _ e d c b a | _ e d a b c |

| 3 | _ e d c b a | _ e a c b d |

| 4 | _ e d c b a | _ a d c b e |

| … | … | … |

Control Flow

halt

Opcode: 0

Solves the halting problem (if the instruction is reached). Indicates graceful shutdown of the VM.

The only legal instruction following instruction halt is halt.

It is only possible to prove correct execution of a program if the last executed instruction is

halt.

nop

Opcode: 8

Does nothing.

skiz

Opcode: 2

Pop the top of the stack and skip the next instruction if the popped element is zero.

“skiz” stands for “skip if zero”.

An alternative perspective for this instruction is “execute if non-zero”, or “execute if”, or even

just “if”.

The amount by which skiz increases the instruction pointer ip depends on both the top of the

stack and the size of the next instruction

in the program (not the next instruction that gets actually

executed).

| next instruction’s size | old stack | new stack | old ip | new ip |

|---|---|---|---|---|

| any | _ a with a ≠ 0 | _ | ip | ip+1 |

| single word | _ 0 | _ | ip | ip+2 |

| double word | _ 0 | _ | ip | ip+3 |

call + d

Opcode: 49

Push address pair (ip+2, d) to the jump stack, and jump to absolute address d.

old ip | new ip | old jump stack | new jump stack |

|---|---|---|---|

ip | d | _ | _ (ip+2, d) |

return

Opcode: 16

Pop one address pair off the jump stack and jump to that pair’s return address (which is the pair’s first element).

Executing this instruction with an empty jump stack crashes Triton VM.

old ip | new ip | old jump stack | new jump stack |

|---|---|---|---|

ip | o | _ (o, d) | _ |

recurse

Opcode: 24

Peek at the top address pair of the jump stack and jump to that pair’s destination address (which is the pair’s second element).

Executing this instruction with an empty jump stack crashes Triton VM.

old ip | new ip | old jump stack | new jump stack |

|---|---|---|---|

ip | d | _ (o, d) | _ (o, d) |

recurse_or_return

Opcode: 32

Like recurse if st5 ≠ st6, like return if st5 = st6.

Instruction recurse_or_return behaves – surprise! – either like instruction recurse

or like instruction return.

The (deterministic) decision which behavior to exhibit is made at runtime and depends on stack

elements st5 and st6, the stack elements at indices 5 and 6.

If st5 ≠ st6, then recurse_or_return acts like instruction recurse, else like

return.

The instruction is designed to facilitate loops using pointer equality as termination condition and

to play nicely with instructions merkle_step and

merkle_step_mem.

Executing this instruction with an empty jump stack crashes Triton VM.

old ip | new ip | old jump stack | new jump stack | |

|---|---|---|---|---|

st5 ≠ st6 | ip | d | _ (o, d) | _ (o, d) |

st5 = st6 | ip | o | _ (o, d) | _ |

assert

Opcode: 10

Pops a if a = 1, else crashes the virtual machine.

| old stack | new stack |

|---|---|

_ a | _ |

Memory Access

read_mem + n

Opcode: 57

Interprets the top of the stack, i.e., st0 as a pointer p into

RAM.

Reads consecutive values from RAM at address p and puts them onto the op

stack below st0.

Decrements the RAM pointer, i.e., the top of the stack, by n.

1 ⩽ n ⩽ 5.

Let the RAM at address p contain a, at address p+1 contain b, and so on.

Then, this instruction behaves as follows:

i | old stack | new stack |

|---|---|---|

1 | _ p | _ a p-1 |

2 | _ p+1 | _ b a p-1 |

3 | _ p+2 | _ c b a p-1 |

4 | _ p+3 | _ d c b a p-1 |

5 | _ p+4 | _ e d c b a p-1 |

write_mem + n

Opcode: 11

Interprets the top of the stack, i.e., st0 as a pointer p into

RAM.

Writes the stack’s n top-most values below st0, called , to RAM at the

address p+i, popping them.

Increments the RAM pointer, i.e., the top of the stack, by n.

1 ⩽ n ⩽ 5.

i | old stack | new stack | old RAM | new RAM |

|---|---|---|---|---|

1 | _ a p | _ p+1 | [] | [p: a] |

2 | _ b a p | _ p+2 | [] | [p: a, p+1: b] |

3 | _ c b a p | _ p+3 | [] | [p: a, p+1: b, p+2: c] |

4 | _ d c b a p | _ p+4 | [] | [p: a, p+1: b, p+2: c, p+3: d] |

5 | _ e d c b a p | _ p+5 | [] | [p: a, p+1: b, p+2: c, p+3: d, p+4: e] |

Hashing

hash

Opcode: 18

Hashes the stack’s ten top-most elements and puts the resulting digest onto the stack.

In more detail:

Pops the stack’s ten top-most elements, jihgfedcba.

The elements are reversed and one-padded to a length of 16, giving abcdefghij111111.

(This padding corresponds to the fixed-length input padding of Tip5;

see also section 2.5 of the Tip5 paper.)

The Tip5 permutation is applied to abcdefghij111111, resulting in αβγδεζηθικuvwxyz.

The first five elements of this result, i.e., αβγδε, are reversed and pushed onto the stack.

The new top of the stack is then εδγβα.

| old stack | new stack |

|---|---|

_ jihgfedcba | _ εδγβα |

assert_vector

Opcode: 26

Asserts equality of to for 0 ⩽ i ⩽ 4.

Crashes the VM if any pair is unequal.

Pops the 5 top-most stack elements.

| old stack | new stack |

|---|---|

_ edcba edcba | _ edcba |

sponge_init

Opcode: 40

Initializes (resets) the Sponge’s state. Must be the first Sponge instruction executed.

sponge_absorb

Opcode: 34

Absorbs the stack’s ten top-most elements into the Sponge state.

Crashes Triton VM if the Sponge state is not initialized.

| old stack | new stack |

|---|---|

_ jihgfedcba | _ |

sponge_absorb_mem

Opcode: 48

Absorbs the ten RAM elements at addresses p, p+1, … into the Sponge state.

Overwrites stack elements st1 through st4 with the first four absorbed elements.

Crashes Triton VM if the Sponge state is not initialized.

| old stack | new stack |

|---|---|

_ dcba p | _ hgfe (p+10) |

sponge_squeeze

Opcode: 56

Squeezes the Sponge and pushes the ten squeezed elements onto the stack.

Crashes Triton VM if the Sponge state is not initialized.

| old stack | new stack |

|---|---|

_ | _ zyxwvutsrq |

Base Field Arithmetic

add

Opcode: 42

Replaces the stack’s top two elements with their sum (as field elements).

| old stack | new stack |

|---|---|

_ b a | _ (a + b) |

addi + a

Opcode: 65

Adds the instruction’s argument a to the top of the stack.

| old stack | new stack |

|---|---|

_ b | _ (a + b) |

mul

Opcode: 50

Replaces the stack’s top two elements with their product (as field elements).

| old stack | new stack |

|---|---|

_ b a | _ (a·b) |

invert

Opcode: 64

Computes the multiplicative inverse (over the field) of the top of the stack. Crashes the VM if the top of the stack is 0.

| old stack | new stack |

|---|---|

_ a | _ (1/a) |

eq

Opcode: 58

Replaces the stack’s top two elements with 1 if they are equal, with 0 otherwise.

| old stack | new stack | |

|---|---|---|

a = b | _ b a | _ 1 |

a ≠ b | _ b a | _ 0 |

Bitwise Arithmetic

split

Opcode: 4

Decomposes the top of the stack into its lower 32 bits and its upper 32 bits. The lower 32 bits are the new top of the stack.

| old stack | new stack |

|---|---|

_ a | _ hi lo |

lt

Opcode: 6

“Less than” of the stack’s two top-most elements.

Crashes the VM if a or b is not u32.

| old stack | new stack |

|---|---|

_ b a | _ a<b |

and

Opcode: 14

Bitwise “and” of the stack’s two top-most elements.

Crashes the VM if a or b is not u32.

| old stack | new stack |

|---|---|

_ b a | _ a&b |

xor

Opcode: 22

Bitwise “exclusive or” of the stack’s two top-most elements.

Crashes the VM if a or b is not u32.

| old stack | new stack |

|---|---|

_ b a | _ a^b |

log_2_floor

Opcode: 12

The number of bits in a minus 1, i.e., .

Crashes the VM if a is 0 or not u32.

| old stack | new stack |

|---|---|

_ a | _ ⌊log₂(a)⌋ |

pow

Opcode: 30

The top of the stack to the power of the stack’s runner up.

Crashes the VM if exponent e is not u32.

| old stack | new stack |

|---|---|

_ e b | _ b**e |

div_mod

Opcode: 20

Division with remainder of numerator n by denominator d.

Guarantees the properties n == q·d + r and r < d.

Crashes the VM if n or d is not u32 or if d is 0.

| old stack | new stack |

|---|---|

_ d n | _ q r |

pop_count

Opcode: 28

Computes the hamming weight or “population count”

of the top of the stack, a.

Crashes the VM if a is not u32.

| old stack | new stack |

|---|---|

_ a | _ w |

Extension Field Arithmetic

xx_add

Opcode: 66

Replaces the top six elements of the stack with the sum of the two extension field elements encoded by the first and the second triple of stack elements.

| old stack | new stack |

|---|---|

_ z y x b c a | _ w v u |

xx_mul

Opcode: 74

Replaces the top six elements of the stack with the product of the two extension field elements encoded by the first and the second triple of stack elements.

| old stack | new stack |

|---|---|

_ z y x b c a | _ w v u |

x_invert

Opcode: 72

Inverts the extension field element encoded by field elements z y x in-place.

Crashes the VM if the extension field element is 0.

| old stack | new stack |

|---|---|

_ z y x | _ w v u |

xb_mul

Opcode: 82

Replaces the stack’s top four elements with the scalar product of the top of the stack with the

extension field element encoded by stack elements st1 through st3.

| old stack | new stack |

|---|---|

_ z y x a | _ w v u |

Input/Output

read_io + n

Opcode: 73

Reads n elements from standard input and pushes them onto the stack.

1 ⩽ n ⩽ 5.

n | old stack | new stack |

|---|---|---|

| 1 | _ | _ a |

| 2 | _ | _ b a |

| 3 | _ | _ c b a |

| 4 | _ | _ d c b a |

| 5 | _ | _ e d c b a |

write_io + n

Opcode: 19

Pops the top n elements from the stack and writes them to standard output.

1 ⩽ n ⩽ 5.

n | old stack | new stack |

|---|---|---|

| 1 | _ a | _ |

| 2 | _ b a | _ |

| 3 | _ c b a | _ |

| 4 | _ d c b a | _ |

| 5 | _ e d c b a | _ |

Many-In-One

merkle_step

Opcode: 36

Helps traversing a Merkle tree during authentication path verification.

The mechanics are as follows.

The 6th element of the stack i (also referred to as st5) is taken as the node index for a Merkle

tree that is claimed to include data whose digest is the content of stack registers st4 through

st0, i.e., edcba.

The sibling digest of edcba is εδγβα and is read from the

input interface of secret data.

The least-significant bit of i indicates whether edcba is the digest of a left leaf or a right

leaf of the Merkle tree’s current level.

Depending on this least significant bit of i, merkle_step either

- (

i= 0 mod 2) interpretsedcbaas the left digest,εδγβαas the right digest, or - (

i= 1 mod 2) interpretsεδγβαas the left digest,edcbaas the right digest.

In either case,

- the left and right digests are hashed, and the resulting digest

zyxwvreplaces the top of the stack, and - 6th register

iis shifted by 1 bit to the right, i.e., the least-significant bit is dropped.

In conjunction with instructions recurse_or_return and

assert_vector, instruction merkle_step and instruction

merkle_step_mem allow efficient verification of a Merkle authentication path.

Crashes the VM if i is not u32.

| old stack | new stack |

|---|---|

_ i edcba | _ (i div 2) zyxwv |

merkle_step_mem

Opcode: 44

Helps traversing a Merkle tree during authentication path verification with the authentication path being supplied in RAM.

This instruction works very similarly to instruction merkle_step.

The main difference, as the name suggests, is the source of the sibling digest:

Instead of reading it from the input interface of secret data, it is supplied via RAM.

Stack element st7 is taken as the RAM pointer, holding the memory address at which the next

sibling digest is located in RAM.

Executing instruction merkle_step_mem increments the memory pointer by the length of one digest,

anticipating an authentication path that is laid out continuously.

Stack element st6 does not change when executing instruction merkle_step_mem in order to

facilitate instruction recurse_or_return.

This instruction allows verifiable re-use of an authentication path. This is necessary, for example, when verifiably updating a Merkle tree: first, the authentication path is used to confirm inclusion of some old leaf, and then to compute the tree’s new root from the new leaf.

Crashes the VM if i is not u32.

| old stack | new stack |

|---|---|

_ p f i edcba | _ p+5 f (i div 2) zyxwv |

xx_dot_step

Opcode: 80

Reads two extension field elements from RAM located at the addresses corresponding to the two top

stack elements, multiplies the extension field elements, and adds the product (p0, p1, p2) to an

accumulator located on stack immediately below the two pointers.

Also, increases the pointers by the number of words read.

This instruction facilitates efficient computation of the dot product of two vectors containing extension field elements.

| old stack | new stack |

|---|---|

_ z y x *b *a | _ z+p2 y+p1 x+p0 *b+3 *a+3 |

xb_dot_step

Opcode: 88

Reads one base field element from RAM located at the addresses corresponding to the top of the

stack, one extension field element from RAM located at the address of the second stack element,

multiplies the field elements, and adds the product (p0, p1, p2) to an accumulator located on

stack immediately below the two pointers.

Also, increase the pointers by the number of words read.

This instruction facilitates efficient computation of the dot product of a vector containing base field elements with a vector containing extension field elements.

| old stack | new stack |

|---|---|

_ z y x *b *a | _ z+p2 y+p1 x+p0 *b+3 *a+1 |

Arithmetization

An arithmetization defines two things:

- algebraic execution tables (AETs), and

- arithmetic intermediate representation (AIR) constraints.

For Triton VM, the execution trace is spread over multiple tables.

These tables are linked by various cryptographic arguments.

This division allows for a separation of concerns.

For example, the main processor’s trace is recorded in the Processor Table.

The main processor can delegate the execution of somewhat-difficult-to-arithmetize instructions like hash or xor to a co-processor.

The arithmetization of the co-processor is largely independent from the main processor and recorded in its separate trace.

For example, instructions relating to hashing are executed by the hash co-processor.

Its trace is recorded in the Hash Table.

Similarly, bitwise instructions are executed by the u32 co-processor, and the corresponding trace recorded in the U32 Table.

Another example for the separation of concerns is memory consistency, the bulk of which is delegated to the Operational Stack Table, RAM Table, and Jump Stack Table.

Algebraic Execution Tables

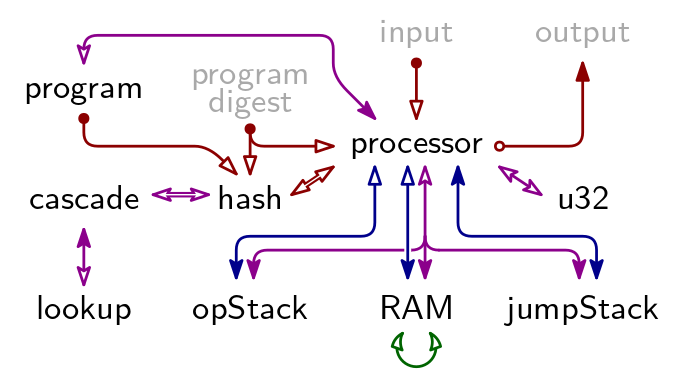

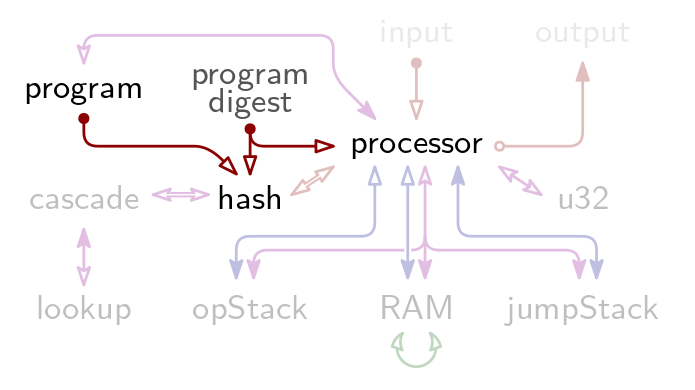

There are 9 Arithmetic Execution Tables in TritonVM. Their relation is described by below figure. A a blue arrow indicates a Permutation Argument, a red arrow indicates an Evaluation Argument, a purple arrow indicates a Lookup Argument, and the green arrow is the Contiguity Argument.

The grayed-out elements “program digest”, “input”, and “output” are not AETs but publicly available information. Together, they constitute the claim for which Triton VM produces a proof. See “Arguments Using Public Information” and “Program Attestation” for the Evaluation Arguments with which they are linked into the rest of the proof system.

Main Tables

The values of all registers, and consequently the elements on the stack, in memory, and so on, are elements of the B-field, i.e., where is the Oxfoi prime, . All values of columns corresponding to one such register are elements of the B-Field as well. Together, these columns are referred to as table’s main columns, and make up the main table.

Auxiliary Tables

The entries of a table’s columns corresponding to Permutation, Evaluation, and Lookup Arguments are elements from the X-field . These columns are referred to as a table’s auxiliary columns, both because the entries are elements of the X-field and because the entries can only be computed using the main tables, through an auxiliary process. Together, these columns are referred to as a table’s auxiliary columns, and make up the auxiliary table.

Padding

For reasons of computational efficiency, it is beneficial that an Algebraic Execution Table’s height equals a power of 2. To this end, tables are padded. The height of the longest AET determines the padded height for all tables, which is .

Arithmetic Intermediate Representation

For each table, up to four lists containing constraints of different type are given:

- Initial Constraints, defining values in a table’s first row,

- Consistency Constraints, establishing consistency within any given row,

- Transition Constraints, establishing the consistency of two consecutive rows in relation to each other, and

- Terminal Constraints, defining values in a table’s last row.

Together, all these constraints constitute the AIR constraints.

Arguments Using Public Information

Triton VM uses a number of public arguments. That is, one side of the link can be computed by the verifier using publicly available information; the other side of the link is verified through one or more AIR constraints.

The two most prominent instances of this are public input and output: both are given to the verifier explicitly. The verifier can compute the terminal of an Evaluation Argument using public input (respectively, output). In the Processor Table, AIR constraints guarantee that the used input (respectively, output) symbols are accumulated similarly. Comparing the two terminal values establishes that use of public input (respectively, production of public output) was integral.

The third public argument establishes correctness of the Lookup Table, and is explained there. The fourth public argument relates to program attestation, and is also explained on its corresponding page.

Arithmetization Overview

Tables

| table name | #main cols | #aux cols | total width |

|---|---|---|---|

| ProgramTable | 7 | 3 | 16 |

| ProcessorTable | 39 | 11 | 72 |

| OpStackTable | 4 | 2 | 10 |

| RamTable | 7 | 6 | 25 |

| JumpStackTable | 5 | 2 | 11 |

| HashTable | 67 | 20 | 127 |

| CascadeTable | 6 | 2 | 12 |

| LookupTable | 4 | 2 | 10 |

| U32Table | 10 | 1 | 13 |

| DegreeLowering (-/8/4) | 0/118/230 | 0/14/38 | 0/160/344 |

| Randomizers | 0 | 1 | 3 |

| TOTAL | 149/267/379 | 50/64/88 | 299/459/643 |

Constraints

The following table captures the state of affairs in terms of constraints before automatic degree lowering. In particular, automatic degree lowering introduces new columns, modifies the constraint set (in a way that is equivalent to what was there before), and lowers the constraints’ maximal degree.

Before automatic degree lowering:

| table name | #initial | #consistency | #transition | #terminal | max degree |

|---|---|---|---|---|---|

| ProgramTable | 6 | 4 | 10 | 2 | 4 |

| ProcessorTable | 29 | 10 | 41 | 1 | 19 |

| OpStackTable | 3 | 0 | 5 | 0 | 4 |

| RamTable | 7 | 0 | 12 | 1 | 5 |

| JumpStackTable | 6 | 0 | 6 | 0 | 5 |

| HashTable | 22 | 45 | 47 | 2 | 9 |

| CascadeTable | 2 | 1 | 3 | 0 | 4 |

| LookupTable | 3 | 1 | 4 | 1 | 3 |

| U32Table | 1 | 15 | 22 | 2 | 12 |

| Grand Cross-Table Argument | 0 | 0 | 0 | 14 | 1 |

| TOTAL | 79 | 76 | 150 | 23 | 19 |

| (# nodes) | (534) | (624) | (6679) | (213) |

After lowering degree to 8:

| table name | #initial | #consistency | #transition | #terminal |

|---|---|---|---|---|

| ProgramTable | 6 | 4 | 10 | 2 |

| ProcessorTable | 29 | 10 | 165 | 1 |

| OpStackTable | 3 | 0 | 5 | 0 |

| RamTable | 7 | 0 | 12 | 1 |

| JumpStackTable | 6 | 0 | 6 | 0 |

| HashTable | 22 | 46 | 49 | 2 |

| CascadeTable | 2 | 1 | 3 | 0 |

| LookupTable | 3 | 1 | 4 | 1 |

| U32Table | 1 | 18 | 24 | 2 |

| Grand Cross-Table Argument | 0 | 0 | 0 | 14 |

| TOTAL | 79 | 80 | 278 | 23 |

| (# nodes) | (534) | (635) | (6956) | (213) |

After lowering degree to 4:

| table name | #initial | #consistency | #transition | #terminal |

|---|---|---|---|---|

| ProgramTable | 6 | 4 | 10 | 2 |

| ProcessorTable | 31 | 10 | 238 | 1 |

| OpStackTable | 3 | 0 | 5 | 0 |

| RamTable | 7 | 0 | 13 | 1 |

| JumpStackTable | 6 | 0 | 7 | 0 |

| HashTable | 22 | 52 | 84 | 2 |

| CascadeTable | 2 | 1 | 3 | 0 |

| LookupTable | 3 | 1 | 4 | 1 |

| U32Table | 1 | 26 | 34 | 2 |

| Grand Cross-Table Argument | 0 | 0 | 0 | 14 |

| TOTAL | 81 | 94 | 398 | 23 |

| (# nodes) | (538) | (676) | (7246) | (213) |

Triton Assembly Constraint Evaluation

Triton VM’s recursive verifier needs to evaluate Triton VM’s AIR constraints. In order to gauge the runtime cost for this step, the following table provides estimates for that step’s contribution to various tables.

| Type | Processor | Op Stack | RAM |

|---|---|---|---|

| static | 33346 | 61505 | 24555 |

| dynamic | 44731 | 69099 | 28350 |

Opcode Pressure

When changing existing or introducing new instructions, one consideration is: how many other instructions compete for opcodes in the same instruction category? The table below helps answer this question at a glance.

| IsU32 | ShrinksStack | HasArg | Num Opcodes |

|---|---|---|---|

| n | n | n | 12 |

| n | n | y | 10 |

| n | y | n | 11 |

| n | y | y | 3 |

| y | n | n | 6 |

| y | n | y | 0 |

| y | y | n | 4 |

| y | y | y | 0 |

Maximum number of opcodes per row is 16.

Program Table

The Program Table contains the entire program as a read-only list of instruction opcodes and their arguments.

The processor looks up instructions and arguments using its instruction pointer ip.

For program attestation, the program is padded and sent to the Hash Table in chunks of size 10, which is the of the Tip5 hash function. Program padding is one 1 followed by the minimal number of 0’s necessary to make the padded input length a multiple of the 1.

Main Columns

The Program Table consists of 7 main columns. Those columns marked with an asterisk (*) are only used for program attestation.

| Column | Description |

|---|---|

Address | an instruction’s address |

Instruction | the (opcode of the) instruction |

LookupMultiplicity | how often an instruction has been executed |

*IndexInChunk | Address modulo the Tip5 , which is 10 |

*MaxMinusIndexInChunkInv | the inverse-or-zero of |

*IsHashInputPadding | padding indicator for absorbing the program into the Sponge |

IsTablePadding | padding indicator for rows only required due to the dominating length of some other table |

Auxiliary Columns

A Lookup Argument with the Processor Table establishes that the processor has loaded the correct instruction (and its argument) from program memory.

To establish the program memory’s side of the Lookup Argument, the Program Table has auxiliary column InstructionLookupServerLogDerivative.

For sending the padded program to the Hash Table, a combination of two Evaluation Arguments is used.

The first, PrepareChunkRunningEvaluation, absorbs one chunk of (i.e. 10) instructions at a time, after which it is reset and starts absorbing again.

The second, SendChunkRunningEvaluation, absorbs one such prepared chunk every 10 instructions.

Padding

A padding row is a copy of the Program Table’s last row with the following modifications:

- column

Addressis increased by 1, - column

Instructionis set to 0, - column

LookupMultiplicityis set to 0, - column

IndexInChunkis set toAddressmod , - column

MaxMinusIndexInChunkInvis set to the inverse-or-zero of , - column

IsHashInputPaddingis set to 1, and - column

IsTablePaddingis set to 1.

Above procedure is iterated until the necessary number of rows have been added.

A Note on the Instruction Lookup Argument

For almost all table-linking arguments, the initial row contains the argument’s initial value after having applied the first update. For example, the initial row for a Lookup Argument usually contains for some and . As an exception, the Program Table’s instruction Lookup Argument always records 0 in the initial row.

Recall that the Lookup Argument is not just about the instruction, but also about the instruction’s argument if it has one, or the next instruction if it has none. In the Program Table, this argument (or the next instruction) is recorded in the next row from the instruction in question. Therefore, verifying correct application of the logarithmic derivative’s update rule requires access to both the current and the next row.

Out of all constraint types, only Transition Constraints have access to more than one row at a time. This implies that the correct application of the first update of the instruction Lookup Argument cannot be verified by an initial constraint. Therefore, the recorded initial value must be independent of the second row.

Consequently, the final value for the Lookup Argument is recorded in the first row just after the program description ends. This row is guaranteed to exist because of the mechanics for program attestation: the program has to be padded with at least one 1 before it is hashed.

Arithmetic Intermediate Representation

Let all household items (🪥, 🛁, etc.) be challenges, concretely evaluation points, supplied by the verifier. Let all fruit & vegetables (🥝, 🥥, etc.) be challenges, concretely weights to compress rows, supplied by the verifier. Both types of challenges are X-field elements, i.e., elements of .

Initial Constraints

- The

Addressis 0. - The

IndexInChunkis 0. - The indicator

IsHashInputPaddingis 0. - The

InstructionLookupServerLogDerivativeis 0. PrepareChunkRunningEvaluationhas absorbedInstructionwith respect to challenge 🪑.SendChunkRunningEvaluationis 1.

Initial Constraints as Polynomials

AddressIndexInChunkIsHashInputPaddingInstructionLookupServerLogDerivativePrepareChunkRunningEvaluation - 🪑 - InstructionSendChunkRunningEvaluation - 1

Consistency Constraints

- The

MaxMinusIndexInChunkInvis zero or the inverse ofIndexInChunk. - The

IndexInChunkis or theMaxMinusIndexInChunkInvis the inverse ofIndexInChunk. - Indicator

IsHashInputPaddingis either 0 or 1. - Indicator

IsTablePaddingis either 0 or 1.

Consistency Constraints as Polynomials

(1 - MaxMinusIndexInChunkInv · (rate - 1 - IndexInChunk)) · MaxMinusIndexInChunkInv(1 - MaxMinusIndexInChunkInv · (rate - 1 - IndexInChunk)) · (rate - 1 - IndexInChunk)IsHashInputPadding · (IsHashInputPadding - 1)IsTablePadding · (IsTablePadding - 1)

Transition Constraints

- The

Addressincreases by 1. - If the

IndexInChunkis not , it increases by 1. Else, theIndexInChunkin the next row is 0. - The indicator

IsHashInputPaddingis 0 or remains unchanged. - The padding indicator

IsTablePaddingis 0 or remains unchanged. - If

IsHashInputPaddingis 0 in the current row and 1 in the next row, thenInstructionin the next row is 1. - If

IsHashInputPaddingis 1 in the current row thenInstructionin the next row is 0. - If

IsHashInputPaddingis 1 in the current row andIndexInChunkis in the current row thenIsTablePaddingis 1 in the next row. - If the current row is not a padding row, the logarithmic derivative accumulates the current row’s address, the current row’s instruction, and the next row’s instruction with respect to challenges 🥝, 🥥, and 🫐 and indeterminate 🪥 respectively. Otherwise, it remains unchanged.

- If the

IndexInChunkin the current row is not , thenPrepareChunkRunningEvaluationabsorbs theInstructionin the next row with respect to challenge 🪑. Otherwise,PrepareChunkRunningEvaluationresets and absorbs theInstructionin the next row with respect to challenge 🪑. - If the next row is not a padding row and the

IndexInChunkin the next row is , thenSendChunkRunningEvaluationabsorbsPrepareChunkRunningEvaluationin the next row with respect to variable 🪣. Otherwise, it remains unchanged.

Transition Constraints as Polynomials

Address' - Address - 1MaxMinusIndexInChunkInv · (IndexInChunk' - IndexInChunk - 1)

+ (1 - MaxMinusIndexInChunkInv · (rate - 1 - IndexInChunk)) · IndexInChunk'IsHashInputPadding · (IsHashInputPadding' - IsHashInputPadding)IsTablePadding · (IsTablePadding' - IsTablePadding)(IsHashInputPadding - 1) · IsHashInputPadding' · (Instruction' - 1)IsHashInputPadding · Instruction'IsHashInputPadding · (1 - MaxMinusIndexInChunkInv · (rate - 1 - IndexInChunk)) · IsTablePadding'(1 - IsHashInputPadding) · ((InstructionLookupServerLogDerivative' - InstructionLookupServerLogDerivative) · (🪥 - 🥝·Address - 🥥·Instruction - 🫐·Instruction') - LookupMultiplicity)

+ IsHashInputPadding · (InstructionLookupServerLogDerivative' - InstructionLookupServerLogDerivative)(rate - 1 - IndexInChunk) · (PrepareChunkRunningEvaluation' - 🪑·PrepareChunkRunningEvaluation - Instruction')

+ (1 - MaxMinusIndexInChunkInv · (rate - 1 - IndexInChunk)) · (PrepareChunkRunningEvaluation' - 🪑 - Instruction')(IsTablePadding' - 1) · (1 - MaxMinusIndexInChunkInv' · (rate - 1 - IndexInChunk')) · (SendChunkRunningEvaluation' - 🪣·SendChunkRunningEvaluation - PrepareChunkRunningEvaluation')

+ (SendChunkRunningEvaluation' - SendChunkRunningEvaluation) · IsTablePadding'

+ (SendChunkRunningEvaluation' - SendChunkRunningEvaluation) · (rate - 1 - IndexInChunk')

Terminal Constraints

- The indicator

IsHashInputPaddingis 1. - The

IndexInChunkis or the indicatorIsTablePaddingis 1.

Terminal Constraints as Polynomials

IsHashInputPadding - 1(rate - 1 - IndexInChunk) · (IsTablePadding - 1)

-

See also section 2.5 “Fixed-Length versus Variable-Length” in the Tip5 paper. ↩

Processor Table

The Processor Table records all of Triton VM states during execution of a particular program.

The states are recorded in chronological order.

The first row is the initial state, the last (non-padding) row is the terminal state, i.e., the state after having executed instruction halt.

It is impossible to generate a valid proof if the instruction executed last is anything but halt.

It is worth highlighting the initialization of the operational stack.

Stack elements st0 through st10 are initially 0.

However, stack elements st11 through st15, i.e., the very bottom of the stack, are initialized with the hash digest of the program that is being executed.

This is primarily useful for recursive verifiers:

they can compare their own program digest to the program digest of the proof they are verifying.

This way, a recursive verifier can easily determine if they are actually recursing, or whether the proof they are checking was generated using an entirely different program.

A more detailed explanation of the mechanics can be found on the page about program attestation.

Main Columns

The processor consists of all registers defined in the Instruction Set Architecture. Each register is assigned a column in the processor table.

Auxiliary Columns

The Processor Table has the following auxiliary columns, corresponding to Evaluation Arguments, Permutation Arguments, and Lookup Arguments:

RunningEvaluationStandardInputfor the Evaluation Argument with the input symbols.RunningEvaluationStandardOutputfor the Evaluation Argument with the output symbols.InstructionLookupClientLogDerivativefor the Lookup Argument with the Program TableRunningProductOpStackTablefor the Permutation Argument with the Op Stack Table.RunningProductRamTablefor the Permutation Argument with the RAM Table. Note that virtual columninstruction_typeholds value 1 for reads and 0 for writes.RunningProductJumpStackTablefor the Permutation Argument with the Jump Stack Table.RunningEvaluationHashInputfor the Evaluation Argument with the Hash Table for copying the input to the hash function from the processor to the hash coprocessor.RunningEvaluationHashDigestfor the Evaluation Argument with the Hash Table for copying the hash digest from the hash coprocessor to the processor.RunningEvaluationSpongefor the Evaluation Argument with the Hash Table for copying the 10 next to-be-absorbed elements from the processor to the hash coprocessor or the 10 next squeezed elements from the hash coprocessor to the processor, depending on the instruction.U32LookupClientLogDerivativefor the Lookup Argument with the U32 Table.ClockJumpDifferenceLookupServerLogDerivativefor the Lookup Argument of clock jump differences with the Op Stack Table, the RAM Table, and the Jump Stack Table.

Permutation Argument with the Op Stack Table

The subset Permutation Argument with the Op Stack Table RunningProductOpStackTable establishes consistency of the op stack underflow memory.

The number of factors incorporated into the running product at any given cycle depends on the executed instruction in this cycle:

for every element pushed to or popped from the stack, there is one factor.

Namely, if the op stack grows, every element spilling from st15 into op stack underflow memory will be incorporated as one factor;

and if the op stack shrinks, every element from op stack underflow memory being transferred into st15 will be one factor.

Notably, if an instruction shrinks the op stack by more than one element in a single clock cycle, each spilled element is incorporated as one factor. The same holds true for instructions growing the op stack by more than one element in a single clock cycle.

One key insight for this Permutation Argument is that the processor will always have access to the elements that are to be read from or written to underflow memory:

if the instruction grows the op stack, then the elements in question currently reside in the directly accessible, top part of the stack;

if the instruction shrinks the op stack, then the elements in question will be in the top part of the stack in the next cycle.

In either case, the Transition Constraint for the Permutation Argument can incorporate the explicitly listed elements as well as the corresponding trivial-to-compute op_stack_pointer.

Padding

A padding row is a copy of the Processor Table’s last row with the following modifications:

- column

clkis increased by 1, - column

IsPaddingis set to 1, - column

cjd_mulis set to 0,

A notable exception:

if the row with clk equal to 1 is a padding row, then the value of cjd_mul is not constrained in that row.

The reason for this exception is the lack of “awareness” of padding rows in the Jump Stack Table:

it keeps looking up clock jump differences in its padding section.

All these clock jumps are guaranteed to have magnitude 1.

Arithmetic Intermediate Representation

Let all household items (🪥, 🛁, etc.) be challenges, concretely evaluation points, supplied by the verifier. Let all fruit & vegetables (🥝, 🥥, etc.) be challenges, concretely weights to compress rows, supplied by the verifier. Both types of challenges are X-field elements, i.e., elements of .

Note, that the transition constraint’s use of some_column vs some_column_next might be a little unintuitive.

For example, take the following part of some execution trace.

| Clock Cycle | Current Instruction | st0 | … | st15 | Running Evaluation “To Hash Table” | Running Evaluation “From Hash Table” |

|---|---|---|---|---|---|---|

foo | 17 | … | 22 | |||

| hash | 17 | … | 22 | |||

bar | 1337 | … | 22 |

In order to verify the correctness of RunningEvaluationHashInput, the corresponding transition constraint needs to conditionally “activate” on row-tuple (, ), where it is conditional on ci_next (not ci), and verifies absorption of the next row, i.e., row .

However, in order to verify the correctness of RunningEvaluationHashDigest, the corresponding transition constraint needs to conditionally “activate” on row-tuple (, ), where it is conditional on ci (not ci_next), and verifies absorption of the next row, i.e., row .

Initial Constraints

- The cycle counter

clkis 0. - The instruction pointer

ipis 0. - The jump address stack pointer

jspis 0. - The jump address origin

jsois 0. - The jump address destination

jsdis 0. - The operational stack element

st0is 0. - The operational stack element

st1is 0. - The operational stack element

st2is 0. - The operational stack element

st3is 0. - The operational stack element

st4is 0. - The operational stack element

st5is 0. - The operational stack element

st6is 0. - The operational stack element

st7is 0. - The operational stack element

st8is 0. - The operational stack element

st9is 0. - The operational stack element

st10is 0. - The Evaluation Argument of operational stack elements

st11throughst15with respect to indeterminate 🥬 equals the public part of program digest challenge, 🫑. See program attestation for more details. - The

op_stack_pointeris 16. RunningEvaluationStandardInputis 1.RunningEvaluationStandardOutputis 1.InstructionLookupClientLogDerivativehas absorbed the first row with respect to challenges 🥝, 🥥, and 🫐 and indeterminate 🪥.RunningProductOpStackTableis 1.RunningProductRamTablehas absorbed the first row with respect to challenges 🍍, 🍈, 🍎, and 🌽 and indeterminate 🛋.RunningProductJumpStackTablehas absorbed the first row with respect to challenges 🍇, 🍅, 🍌, 🍏, and 🍐 and indeterminate 🧴.RunningEvaluationHashInputhas absorbed the first row with respect to challenges 🧄₀ through 🧄₉ and indeterminate 🚪 if the current instruction ishash. Otherwise, it is 1.RunningEvaluationHashDigestis 1.RunningEvaluationSpongeis 1.U32LookupClientLogDerivativeis 0.ClockJumpDifferenceLookupServerLogDerivativestarts having accumulated the first contribution.

Consistency Constraints

- The composition of instruction bits

ib0throughib6corresponds to the current instructionci. - The instruction bit

ib0is a bit. - The instruction bit

ib1is a bit. - The instruction bit

ib2is a bit. - The instruction bit

ib3is a bit. - The instruction bit

ib4is a bit. - The instruction bit

ib5is a bit. - The instruction bit

ib6is a bit. - The padding indicator

IsPaddingis either 0 or 1. - If the current padding row is a padding row and

clkis not 1, then the clock jump difference lookup multiplicity is 0.

Transition Constraints

Due to their complexity, instruction-specific constraints are defined in their own section. The following additional constraints also apply to every pair of rows.

- The cycle counter

clkincreases by 1. - The padding indicator

IsPaddingis 0 or remains unchanged. - If the next row is not a padding row, the logarithmic derivative for the Program Table absorbs the next row with respect to challenges 🥝, 🥥, and 🫐 and indeterminate 🪥. Otherwise, it remains unchanged.

- The running sum for the logarithmic derivative of the clock jump difference lookup argument accumulates the next row’s

clkwith the appropriate multiplicitycjd_mulwith respect to indeterminate 🪞. - The running product for the Jump Stack Table absorbs the next row with respect to challenges 🍇, 🍅, 🍌, 🍏, and 🍐 and indeterminate 🧴.

-

- If the current instruction in the next row is

hash, the running evaluation “Hash Input” absorbs the next row with respect to challenges 🧄₀ through 🧄₉ and indeterminate 🚪. - If the current instruction in the next row is

merkle_stepormerkle_step_memand helper variablehv5…- …is 0, the running evaluation “Hash Input” absorbs next row’s

st0throughst4andhv0throughhv4… - …is 1, the running evaluation “Hash Input” absorbs next row’s

hv0throughhv4andst0throughst4…

…with respect to challenges 🧄₀ through 🧄₉ and indeterminate 🚪.

- …is 0, the running evaluation “Hash Input” absorbs next row’s

- Otherwise, it remains unchanged.

- If the current instruction in the next row is

- If the current instruction is

hash,merkle_step, ormerkle_step_mem, the running evaluation “Hash Digest” absorbs the next row with respect to challenges 🧄₀ through 🧄₄ and indeterminate 🪟. Otherwise, it remains unchanged. - If the current instruction is

sponge_init, then the running evaluation “Sponge” absorbs the current instruction and the Sponge’s default initial state with respect to challenges 🧅 and 🧄₀ through 🧄₉ and indeterminate 🧽. Else if the current instruction issponge_absorb, then the running evaluation “Sponge” absorbs the current instruction and the current row with respect to challenges 🧅 and 🧄₀ through 🧄₉ and indeterminate 🧽. Else if the current instruction issponge_squeeze, then the running evaluation “Sponge” absorbs the current instruction and the next row with respect to challenges 🧅 and 🧄₀ through 🧄₉ and indeterminate 🧽. Else if the current instruction issponge_absorb_mem, then the running evaluation “Sponge” absorbs the opcode of instructionsponge_absorband stack elementsst1throughst4and all 6 helper variables with respect to challenges 🧅 and 🧄₀ through 🧄₉ and indeterminate 🧽. Otherwise, the running evaluation remains unchanged. -

- If the current instruction is

split, then the logarithmic derivative for the Lookup Argument with the U32 Table accumulatesst0andst1in the next row andciin the current row with respect to challenges 🥜, 🌰, and 🥑, and indeterminate 🧷. - If the current instruction is

lt,and,xor, orpow, then the logarithmic derivative for the Lookup Argument with the U32 Table accumulatesst0,st1, andciin the current row andst0in the next row with respect to challenges 🥜, 🌰, 🥑, and 🥕, and indeterminate 🧷. - If the current instruction is

log_2_floor, then the logarithmic derivative for the Lookup Argument with the U32 Table accumulatesst0andciin the current row andst0in the next row with respect to challenges 🥜, 🥑, and 🥕, and indeterminate 🧷. - If the current instruction is

div_mod, then the logarithmic derivative for the Lookup Argument with the U32 Table accumulates bothst0in the next row andst1in the current row as well as the constantsopcode(lt)and1with respect to challenges 🥜, 🌰, 🥑, and 🥕, and indeterminate 🧷.st0in the current row andst1in the next row as well asopcode(split)with respect to challenges 🥜, 🌰, and 🥑, and indeterminate 🧷.

- If the current instruction is

merkle_stepormerkle_step_mem, then the logarithmic derivative for the Lookup Argument with the U32 Table accumulatesst5from the current and next rows as well asopcode(split)with respect to challenges 🥜, 🌰, and 🥑, and indeterminate 🧷. - If the current instruction is

pop_count, then the logarithmic derivative for the Lookup Argument with the U32 Table accumulatesst0andciin the current row andst0in the next row with respect to challenges 🥜, 🥑, and 🥕, and indeterminate 🧷. - Else, i.e., if the current instruction is not a u32 instruction, the logarithmic derivative for the Lookup Argument with the U32 Table remains unchanged.

- If the current instruction is

Terminal Constraints

- In the last row, register “current instruction”

ciis 0, corresponding to instructionhalt.

Instruction Groups

Some transition constraints are shared across some, or even many instructions.

For example, most instructions must not change the jump stack.

Likewise, most instructions must not change RAM.

To simplify presentation of the instruction-specific transition constraints, these common constraints are grouped together and aliased.

Continuing above example, instruction group keep_jump_stack contains all transition constraints to ensure that the jump stack remains unchanged.

The next section treats each instruction group in detail. The following table lists and briefly explains all instruction groups.

| group name | description |

|---|---|

decompose_arg | instruction’s argument held in nia is binary decomposed into helper registers hv0 through hv3 |

prohibit_illegal_num_words | constrain the instruction’s argument n to 1 ⩽ n ⩽ 5 |

no_io | the running evaluations for public input & output remain unchanged |

no_ram | RAM is not being accessed, i.e., the running product of the Permutation Argument with the RAM Table remains unchanged |

keep_jump_stack | jump stack does not change, i.e., registers jsp, jso, and jsd do not change |

step_1 | jump stack does not change and instruction pointer ip increases by 1 |

step_2 | jump stack does not change and instruction pointer ip increases by 2 |

grow_op_stack | op stack elements are shifted down by one position, top element of the resulting stack is unconstrained |

grow_op_stack_by_any_of | op stack elements are shifted down by n positions, top n elements of the resulting stack are unconstrained, where n is the instruction’s argument |

keep_op_stack_height | the op stack height remains unchanged, and so in particular the running product of the Permutation Argument with the Op Stack table remains unchanged |

op_stack_remains_except_top_n | all but the top n elements of the op stack remain unchanged |

keep_op_stack | op stack remains entirely unchanged |

binary_operation | op stack elements starting from st2 are shifted up by one position, highest two elements of the resulting stack are unconstrained |

shrink_op_stack | op stack elements starting from st1 are shifted up by one position |

shrink_op_stack_by_any_of | op stack elements starting from stn are shifted up by one position, where n is the instruction’s argument |

The following instruction groups conceptually fit the category of ‘instruction groups’ but are not used or enforced through AIR constraints.

| group name | description |

|---|---|

no_hash | The hash coprocessor is not accessed. The constraints are implied by the evaluation argument with the Hash Table which takes the current instruction into account. |

A summary of all instructions and which groups they are part of is given in the following table.

| instruction | decompose_arg | prohibit_illegal_num_words | no_io | no_ram | keep_jump_stack | step_1 | step_2 | grow_op_stack | grow_op_stack_by_any_of | keep_op_stack_height | op_stack_remains_except_top_n | keep_op_stack | binary_operation | shrink_op_stack | shrink_op_stack_by_any_of |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

push + a | x | x | x | x | |||||||||||

pop + n | x | x | x | x | x | x | |||||||||

divine + n | x | x | x | x | x | x | |||||||||

pick + i | x | x | x | x | x | ||||||||||

place + i | x | x | x | x | x | ||||||||||

dup + i | x | x | x | x | x | ||||||||||

swap + i | x | x | x | x | x | ||||||||||

nop | x | x | x | x | 0 | x | |||||||||

skiz | x | x | x | x | |||||||||||

call + d | x | x | x | 0 | x | ||||||||||

return | x | x | x | 0 | x | ||||||||||

recurse | x | x | x | x | 0 | x | |||||||||

recurse_or_return | x | x | x | 0 | x | ||||||||||

assert | x | x | x | x | |||||||||||

halt | x | x | x | x | 0 | x | |||||||||

read_mem + n | x | x | x | x | |||||||||||

write_mem + n | x | x | x | x | |||||||||||

hash | x | x | x | ||||||||||||

assert_vector | x | x | x | ||||||||||||

sponge_init | x | x | x | ||||||||||||

sponge_absorb | x | x | x | ||||||||||||

sponge_absorb_mem | x | x | 5 | ||||||||||||

sponge_squeeze | x | x | x | ||||||||||||

add | x | x | x | ||||||||||||

addi + a | x | x | x | x | 1 | ||||||||||

mul | x | x | x | x | |||||||||||

invert | x | x | x | x | 1 | ||||||||||

eq | x | x | x | x | |||||||||||

split | x | x | x | ||||||||||||

lt | x | x | x | x | |||||||||||

and | x | x | x | x | |||||||||||

xor | x | x | x | x | |||||||||||

log_2_floor | x | x | x | x | 1 | ||||||||||

pow | x | x | x | x | |||||||||||

div_mod | x | x | x | x | 2 | ||||||||||

pop_count | x | x | x | x | 1 | ||||||||||

xx_add | x | x | x | ||||||||||||

xx_mul | x | x | x | ||||||||||||

x_invert | x | x | x | x | 3 | ||||||||||

xb_mul | x | x | x | ||||||||||||

read_io + n | x | x | x | x | x | ||||||||||

write_io + n | x | x | x | x | x | ||||||||||

merkle_step | x | x | x | 6 | |||||||||||

merkle_step_mem | x | x | 8 | ||||||||||||

xx_dot_step | x | x | 5 | ||||||||||||

xb_dot_step | x | x | 5 | x |

Indicator Polynomials ind_i(hv3, hv2, hv1, hv0)

In this and the following sections, a register marked with a ' refers to the next state of that register.

For example, st0' = st0 + 2 means that stack register st0 is incremented by 2.

An alternative view for the same concept is that registers marked with ' are those of the next row in the table.

For instructions like dup i, swap i, pop n, et cetera, it is beneficial to have polynomials that evaluate to 1 if the instruction’s argument is a specific value, and to 0 otherwise.

This allows indicating which registers are constraint, and in which way they are, depending on the argument.

This is the purpose of the indicator polynomials ind_i.

Evaluated on the binary decomposition of i, they show the behavior described above.

For example, take i = 13.

The corresponding binary decomposition is (hv3, hv2, hv1, hv0) = (1, 1, 0, 1).

Indicator polynomial ind_13(hv3, hv2, hv1, hv0) is defined as hv3·hv2·(1 - hv1)·hv0.

It evaluates to 1 on (1, 1, 0, 1), i.e., ind_13(1, 1, 0, 1) = 1.

Any other indicator polynomial, like ind_7, evaluates to 0 on (1, 1, 0, 1).

Likewise, the indicator polynomial for 13 evaluates to 0 for any other argument.

The list of all 16 indicator polynomials is:

ind_0(hv3, hv2, hv1, hv0) = (1 - hv3)·(1 - hv2)·(1 - hv1)·(1 - hv0)ind_1(hv3, hv2, hv1, hv0) = (1 - hv3)·(1 - hv2)·(1 - hv1)·hv0ind_2(hv3, hv2, hv1, hv0) = (1 - hv3)·(1 - hv2)·hv1·(1 - hv0)ind_3(hv3, hv2, hv1, hv0) = (1 - hv3)·(1 - hv2)·hv1·hv0ind_4(hv3, hv2, hv1, hv0) = (1 - hv3)·hv2·(1 - hv1)·(1 - hv0)ind_5(hv3, hv2, hv1, hv0) = (1 - hv3)·hv2·(1 - hv1)·hv0ind_6(hv3, hv2, hv1, hv0) = (1 - hv3)·hv2·hv1·(1 - hv0)ind_7(hv3, hv2, hv1, hv0) = (1 - hv3)·hv2·hv1·hv0ind_8(hv3, hv2, hv1, hv0) = hv3·(1 - hv2)·(1 - hv1)·(1 - hv0)ind_9(hv3, hv2, hv1, hv0) = hv3·(1 - hv2)·(1 - hv1)·hv0ind_10(hv3, hv2, hv1, hv0) = hv3·(1 - hv2)·hv1·(1 - hv0)ind_11(hv3, hv2, hv1, hv0) = hv3·(1 - hv2)·hv1·hv0ind_12(hv3, hv2, hv1, hv0) = hv3·hv2·(1 - hv1)·(1 - hv0)ind_13(hv3, hv2, hv1, hv0) = hv3·hv2·(1 - hv1)·hv0ind_14(hv3, hv2, hv1, hv0) = hv3·hv2·hv1·(1 - hv0)ind_15(hv3, hv2, hv1, hv0) = hv3·hv2·hv1·hv0

Group decompose_arg

Description

- The helper variables

hv0throughhv3are the binary decomposition of the instruction’s argument, which is held in registernia. - The helper variable

hv0is either 0 or 1. - The helper variable

hv1is either 0 or 1. - The helper variable

hv2is either 0 or 1. - The helper variable

hv3is either 0 or 1.

Polynomials

nia - (8·hv3 + 4·hv2 + 2·hv1 + hv0)hv0·(hv0 - 1)hv1·(hv1 - 1)hv2·(hv2 - 1)hv3·(hv3 - 1)

Group prohibit_illegal_num_words

Is only ever used in combination with instruction group decompose_arg.

Therefore, the instruction argument’s correct binary decomposition is known to be in helper variables hv0 through hv3.

Description

- The argument is not 0.

- The argument is not 6.

- The argument is not 7.

- The argument is not 8.

- The argument is not 9.

- The argument is not 10.

- The argument is not 11.

- The argument is not 12.

- The argument is not 13.

- The argument is not 14.

- The argument is not 15.

Polynomials

ind_0(hv3, hv2, hv1, hv0)ind_6(hv3, hv2, hv1, hv0)ind_7(hv3, hv2, hv1, hv0)ind_8(hv3, hv2, hv1, hv0)ind_9(hv3, hv2, hv1, hv0)ind_10(hv3, hv2, hv1, hv0)ind_11(hv3, hv2, hv1, hv0)ind_12(hv3, hv2, hv1, hv0)ind_13(hv3, hv2, hv1, hv0)ind_14(hv3, hv2, hv1, hv0)ind_15(hv3, hv2, hv1, hv0)

Group no_io

Description

- The running evaluation for standard input remains unchanged.

- The running evaluation for standard output remains unchanged.

Polynomials

RunningEvaluationStandardInput' - RunningEvaluationStandardInputRunningEvaluationStandardOutput' - RunningEvaluationStandardOutput

Group no_ram

Description

- The running product for the Permutation Argument with the RAM table does not change.

Polynomials

RunningProductRamTable' - RunningProductRamTable

Group keep_jump_stack

Description

- The jump stack pointer

jspdoes not change. - The jump stack origin

jsodoes not change. - The jump stack destination

jsddoes not change.

Polynomials

jsp' - jspjso' - jsojsd' - jsd

Group step_1

Contains all constraints from instruction group keep_jump_stack, and additionally:

Description

- The instruction pointer increments by 1.

Polynomials

ip' - (ip + 1)

Group step_2

Contains all constraints from instruction group keep_jump_stack, and additionally:

Description

- The instruction pointer increments by 2.

Polynomials

ip' - (ip + 2)

Group grow_op_stack

Description

- The stack element in

st0is moved intost1. - The stack element in

st1is moved intost2. - The stack element in

st2is moved intost3. - The stack element in

st3is moved intost4. - The stack element in

st4is moved intost5. - The stack element in

st5is moved intost6. - The stack element in

st6is moved intost7. - The stack element in

st7is moved intost8. - The stack element in

st8is moved intost9. - The stack element in

st9is moved intost10. - The stack element in

st10is moved intost11. - The stack element in

st11is moved intost12. - The stack element in

st12is moved intost13. - The stack element in

st13is moved intost14. - The stack element in

st14is moved intost15. - The op stack pointer is incremented by 1.

- The running product for the Op Stack Table absorbs the current row with respect to challenges 🍋, 🍊, 🍉, and 🫒 and indeterminate 🪤.

Polynomials

st1' - st0st2' - st1st3' - st2st4' - st3st5' - st4st6' - st5st7' - st6st8' - st7st9' - st8st10' - st9st11' - st10st12' - st11st13' - st12st14' - st13st15' - st14op_stack_pointer' - (op_stack_pointer + 1)RunningProductOpStackTable' - RunningProductOpStackTable·(🪤 - 🍋·clk - 🍊·ib1 - 🍉·op_stack_pointer - 🫒·st15)

Group grow_op_stack_by_any_of

Is only ever used in combination with instruction group decompose_arg.

Therefore, the instruction’s argument n correct binary decomposition is known to be in helper variables hv0 through hv3.

Description

- If

nis 1, thenst0is moved intost1

else ifnis 2, thenst0is moved intost2

else ifnis 3, thenst0is moved intost3

else ifnis 4, thenst0is moved intost4

else ifnis 5, thenst0is moved intost5. - If

nis 1, thenst1is moved intost2

else ifnis 2, thenst1is moved intost3

else ifnis 3, thenst1is moved intost4

else ifnis 4, thenst1is moved intost5

else ifnis 5, thenst1is moved intost6. - If

nis 1, thenst2is moved intost3

else ifnis 2, thenst2is moved intost4

else ifnis 3, thenst2is moved intost5

else ifnis 4, thenst2is moved intost6

else ifnis 5, thenst2is moved intost7. - If

nis 1, thenst3is moved intost4

else ifnis 2, thenst3is moved intost5

else ifnis 3, thenst3is moved intost6

else ifnis 4, thenst3is moved intost7

else ifnis 5, thenst3is moved intost8. - If

nis 1, thenst4is moved intost5

else ifnis 2, thenst4is moved intost6

else ifnis 3, thenst4is moved intost7

else ifnis 4, thenst4is moved intost8

else ifnis 5, thenst4is moved intost9. - If

nis 1, thenst5is moved intost6

else ifnis 2, thenst5is moved intost7

else ifnis 3, thenst5is moved intost8

else ifnis 4, thenst5is moved intost9

else ifnis 5, thenst5is moved intost10. - If

nis 1, thenst6is moved intost7

else ifnis 2, thenst6is moved intost8

else ifnis 3, thenst6is moved intost9

else ifnis 4, thenst6is moved intost10

else ifnis 5, thenst6is moved intost11. - If

nis 1, thenst7is moved intost8

else ifnis 2, thenst7is moved intost9

else ifnis 3, thenst7is moved intost10

else ifnis 4, thenst7is moved intost11

else ifnis 5, thenst7is moved intost12. - If

nis 1, thenst8is moved intost9

else ifnis 2, thenst8is moved intost10

else ifnis 3, thenst8is moved intost11

else ifnis 4, thenst8is moved intost12

else ifnis 5, thenst8is moved intost13. - If

nis 1, thenst9is moved intost10

else ifnis 2, thenst9is moved intost11

else ifnis 3, thenst9is moved intost12

else ifnis 4, thenst9is moved intost13

else ifnis 5, thenst9is moved intost14. - If

nis 1, thenst10is moved intost11

else ifnis 2, thenst10is moved intost12

else ifnis 3, thenst10is moved intost13

else ifnis 4, thenst10is moved intost14

else ifnis 5, thenst10is moved intost15. - If

nis 1, thenst11is moved intost12

else ifnis 2, thenst11is moved intost13

else ifnis 3, thenst11is moved intost14

else ifnis 4, thenst11is moved intost15

else ifnis 5, then the op stack pointer grows by 5. - If

nis 1, thenst12is moved intost13

else ifnis 2, thenst12is moved intost14

else ifnis 3, thenst12is moved intost15

else ifnis 4, then the op stack pointer grows by 4

else ifnis 5, then the running product with the Op Stack Table accumulatesst11throughst15. - If

nis 1, thenst13is moved intost14

else ifnis 2, thenst13is moved intost15

else ifnis 3, then the op stack pointer grows by 3

else ifnis 4, then the running product with the Op Stack Table accumulatesst12throughst15. - If

nis 1, thenst14is moved intost15

else ifnis 2, then the op stack pointer grows by 2

else ifnis 3, then the running product with the Op Stack Table accumulatesst13throughst15. - If

nis 1, then the op stack pointer grows by 1

else ifnis 2, then the running product with the Op Stack Table accumulatesst14andst15. - If

nis 1, then the running product with the Op Stack Table accumulatesst15.

Group keep_op_stack_height

The op stack pointer and the running product for the Permutation Argument with the Op Stack Table remain the same. In other words, there is no access (neither read nor write) from/to the Op Stack Table.

Description

- The op stack pointer does not change.

- The running product for the Op Stack Table remains unchanged.

Polynomials

op_stack_pointer' - op_stack_pointerRunningProductOpStackTable' - RunningProductOpStackTable

Group op_stack_remains_except_top_n_elements_unconstrained

Contains all constraints from group keep_op_stack_height, and additionally ensures that all but the top n op stack elements remain the same. (The top n elements are unconstrained.)

Description

- For

iin{n, ..., NUM_OP_STACK_REGISTERS-1}The stack element instidoes not change.

Polynomials

stn' - stn- …

stN' - stN